Causation vs. Association: The Art of Not Getting Duped

From researchers to reporters, how exaggeration and misunderstanding turn weak studies into viral myths and thirsty headlines

TL;DR: How Science Gets Twisted & What You Need to Know

1️⃣ Correlation ≠ Causation – Just because two things happen together doesn’t mean one caused the other. Example: Ice cream sales and shark attacks both rise in summer because of a shared cause (hot weather), not because one drives the other.

2️⃣ Media Loves Misleading Headlines – Words like “linked to,” “associated with,” and “tied to” are red flags. They imply causation even when the study only found an association.

3️⃣ Studies Can Be Flawed – Small sample sizes, confounding variables, and biased funding often skew results. Most headlines don’t mention these details.

4️⃣ Cannabis & Heart Disease? – A recent study claimed a link but failed to control for smoking, diet, or stress—making the results questionable at best.

5️⃣ How to Protect Yourself – Don’t trust a single study. Look for absolute vs. relative risk, study replication, funding sources, and dose-response relationships before believing a claim.

Read on for the full breakdown.

The Causation Illusion: How Your Brain (and the Media) Trick You

You’ve seen the headlines. 📰

📢 “Eating Chocolate Linked to Higher Intelligence!”

📢 “Owning a Cat Comes with Increased Risk of Schizophrenia!”

📢 “Wearing Yellow Tied to Financial Success!”

Sounds compelling, right? Eye-catching, clickable, maddeningly misleading.

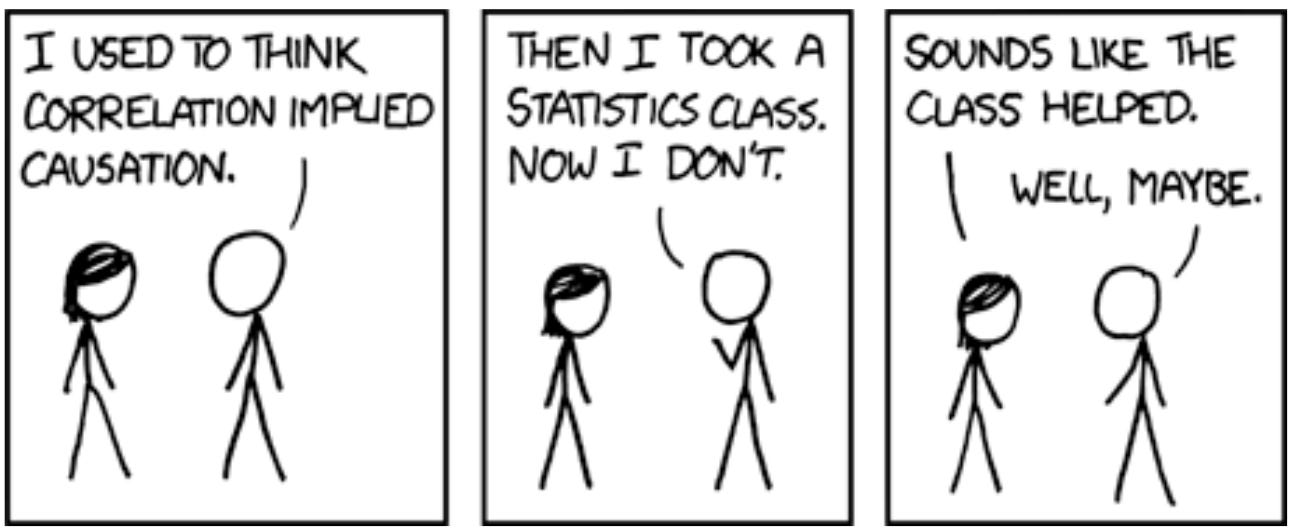

Welcome to the wild world of causation vs. association, where correlation—a statistical relationship between two things—masquerades as cause. And everyone from the media to your well-meaning Aunt Linda on Facebook is eager to tell you that one thing must be causing another because, well… just look at the numbers!

It’s an easy trap to fall into because our brains love simple explanations. We crave neat, cause-and-effect relationships. It rained because I washed my car. My phone slowed down because Apple wants me to buy a new one. More people are getting tattoos, and the economy is getting worse—coincidence? I think not.

But here’s the truth: most studies behind these headlines don’t prove causation, and most weren’t designed to. What you usually read about is an association: two things that tend to occur together, not one causing the other. But thanks to slippery phrasing and click-hungry headlines, we’re left thinking otherwise.

Let’s fix that.

Wait, What’s the Difference?

Causation:

Causation means A directly makes B happen. There’s a proven, repeatable, demonstrable mechanism at work.

Example: Drinking poison causes death. No arguments there. No “linked to” needed.

Example: Dropping your phone into a pot of boiling water causes it to break. (Sorry, no headline necessary.)

Association:

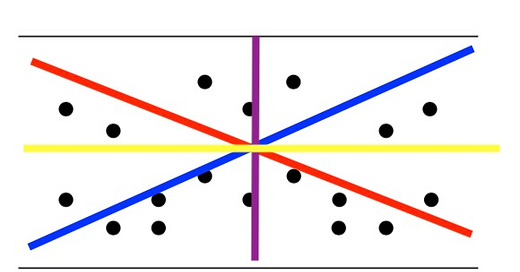

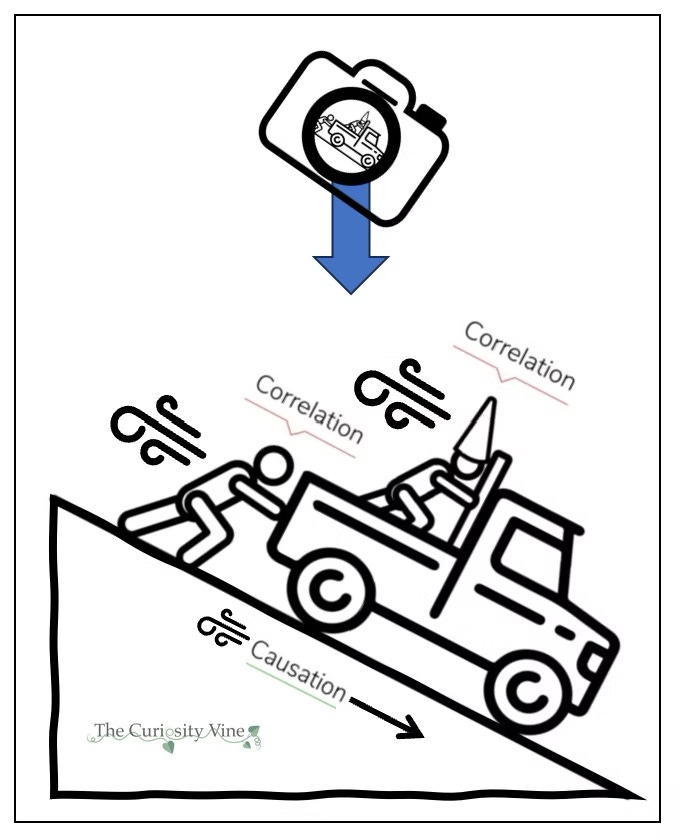

Association means A and B tend to happen together, but that doesn’t mean one causes the other. Maybe A causes B. Maybe B causes A. Maybe C causes both. Maybe they’re just statistical roommates, coexisting by pure coincidence.

Example: People who carry lighters are more likely to get lung cancer. But lighters don’t cause cancer—smoking does.

Example: People who own more books tend to be smarter. But buying books doesn’t make you a genius—reading them might, or maybe smart people just buy books.

Example: Ice cream sales and shark attacks both rise in the summer. Because it’s summer. Not because sharks have suddenly developed a craving for mint chocolate chip.
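To see how a hidden third factor can manufacture an association, here is a minimal Python sketch of the lighter example above. The counts are entirely hypothetical, chosen so that smoking, not lighters, drives cancer risk. The Mantel-Haenszel risk ratio is one standard way of "controlling for" a confounder: compare like with like inside each stratum, then combine.

```python
from dataclasses import dataclass


@dataclass
class Stratum:
    """2x2 counts within one level of a suspected confounder (hypothetical numbers)."""
    exposed_cases: int
    exposed_total: int
    unexposed_cases: int
    unexposed_total: int

    def risk_ratio(self) -> float:
        """Risk in the exposed divided by risk in the unexposed, within this stratum."""
        exposed_risk = self.exposed_cases / self.exposed_total
        unexposed_risk = self.unexposed_cases / self.unexposed_total
        return exposed_risk / unexposed_risk


def crude_risk_ratio(strata: list[Stratum]) -> float:
    """Pool all strata together, ignoring the confounder entirely."""
    a = sum(s.exposed_cases for s in strata)
    n1 = sum(s.exposed_total for s in strata)
    c = sum(s.unexposed_cases for s in strata)
    n0 = sum(s.unexposed_total for s in strata)
    return (a / n1) / (c / n0)


def mantel_haenszel_risk_ratio(strata: list[Stratum]) -> float:
    """Combine the within-stratum comparisons into one confounder-adjusted risk ratio."""
    numerator = 0.0
    denominator = 0.0
    for s in strata:
        n = s.exposed_total + s.unexposed_total
        numerator += s.exposed_cases * s.unexposed_total / n
        denominator += s.unexposed_cases * s.exposed_total / n
    return numerator / denominator


# Exposure: carries a lighter. Outcome: lung cancer. Confounder: smoking.
smokers = Stratum(exposed_cases=80, exposed_total=800, unexposed_cases=20, unexposed_total=200)
non_smokers = Stratum(exposed_cases=1, exposed_total=100, unexposed_cases=9, unexposed_total=900)
strata = [smokers, non_smokers]

print(f"Crude risk ratio (ignoring smoking): {crude_risk_ratio(strata):.2f}")
print(f"Among smokers:     {smokers.risk_ratio():.2f}")
print(f"Among non-smokers: {non_smokers.risk_ratio():.2f}")
print(f"Mantel-Haenszel (adjusted for smoking): {mantel_haenszel_risk_ratio(strata):.2f}")
```

With these made-up numbers, lighter carriers look about 3.4 times as likely to get lung cancer overall, yet within smokers and within non-smokers the risk ratio is exactly 1. The whole "effect" came from smoking.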

Correlation: A Statistic, Not a Cause

If sales of organic food and autism diagnoses both rise in the same decade, that’s a correlation. But one doesn’t cause the other—they just happen to be increasing at the same time. Yet, this kind of data is often twisted into misleading claims, especially when the media is involved.

👉 Example: Autism diagnoses have risen, and so has the number of childhood vaccines. Some people jumped to the conclusion: ‘More vaccines = more autism.’

🚨 The reality?

1. Autism awareness and diagnostic criteria have changed, leading to more kids being identified, so the rise in diagnoses doesn’t by itself show that autism is becoming more common.

2. Some children who would have previously been labeled as “developmentally delayed” are now correctly diagnosed with autism spectrum disorder (ASD).

3. The original study that falsely linked vaccines to autism was retracted, and further research found no connection—but the damage was already done.

4. People still believe the myth because misinformation spreads faster than corrections.

The Media’s Favorite Trick: Misleading Phrasing

The media knows causation sells but association is easier to find. So, what do they do? They fudge the language—just enough to imply causation without actually stating it outright.

Once you recognize the patterns, you’ll start seeing them everywhere. Here’s the cheat sheet for spotting the worst offenders in science reporting:

🚩 “Linked to” – Translation: We found two things happening at the same time, and we’re going to pretend they’re related!

– This was the phrase that helped fuel the vaccine-autism myth. A now-debunked study wrongly claimed a connection between vaccines and autism, and even though it was retracted, the “linked to” phrasing stuck.

🚩 “Comes with” – Translation: These things tend to exist together, but we have no clue why.

🚩 “Tied to” – Translation: We noticed a pattern and we’re gonna make it sound dramatic!

🚩 “Heavily correlated with” – Translation: Statisticians found a relationship, but that doesn’t mean one thing is making the other happen.

🚩 “Associated with” – Translation: These two things co-exist, but we’re not saying one causes the other (even though we really want you to think so).

Each of these phrases allows sensationalized headlines to suggest something far more dramatic than what the research actually found. Next time you see a headline throwing these phrases around, assume they’re twisting the data—because they probably are!

Case Study: The Cannabis & Heart Disease Study That Got Overhyped

Let’s take a real-world example: a 2025 study published in JACC: Advances examined whether cannabis use increases the risk of heart disease. [Read the PDF here]

Here’s what the study actually found:

🔹 They compared cannabis users and non-users who were already “relatively healthy” (no prior history of heart disease, high cholesterol, or diabetes).

🔹 Cannabis users showed a slightly higher risk of heart attack over several years. But here’s how the numbers were twisted:

• The ‘absolute risk increase’ was just 0.47 percentage points, less than half a percent.

• But the ‘relative risk’ looked big, which makes for scarier headlines.

It’s like saying, ‘Eating ice cream doubles your risk of shark attack!’ If the original risk was 1 in 10 million, doubling it still makes it only 2 in 10 million. The risk is real, but does it actually matter for more than just a headline?


🔹 The study did not control for confounders like diet, exercise, stress, or other drug use. This means that cannabis users in the study may have been more likely to smoke cigarettes, have different stress levels, or eat differently, any of which could have contributed to the slightly increased heart disease risk. Without accounting for these factors, the study can’t isolate cannabis as the cause. Yet that didn’t stop the headlines from claiming cannabis ‘causes’ heart attacks.
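The arithmetic behind the shark example is worth spelling out, because the same absolute change can produce very different relative numbers. This is a small Python sketch; the baseline risks in the loop are hypothetical, since only the 0.47-point absolute increase is quoted here.

```python
def risk_summary(baseline_risk: float, exposed_risk: float) -> dict[str, float]:
    """Express one change in risk in relative and absolute terms.

    Risks are proportions, so 0.01 means 1%.
    """
    difference = exposed_risk - baseline_risk
    return {
        "relative_risk": exposed_risk / baseline_risk,
        "absolute_increase_points": difference * 100,  # percentage points
        "extra_cases_per_10k": difference * 10_000,
    }


# Ice cream / shark illustration: 1 in 10 million becomes 2 in 10 million.
shark = risk_summary(1 / 10_000_000, 2 / 10_000_000)
print(f"Shark attacks: relative risk {shark['relative_risk']:.1f}, "
      f"extra cases per 10,000 people {shark['extra_cases_per_10k']:.4f}")

# The same 0.47-point absolute increase on top of HYPOTHETICAL baselines:
# the relative figure depends heavily on the baseline, the absolute one does not.
for baseline in (0.01, 0.05, 0.10):
    s = risk_summary(baseline, baseline + 0.0047)
    print(f"Baseline {baseline:.0%}: relative risk {s['relative_risk']:.3f}, "
          f"extra cases per 10,000 people {s['extra_cases_per_10k']:.0f}")
```

A "doubled" risk of shark attack adds one-thousandth of a case per 10,000 people. The same 0.47-point increase reads as a 47% jump on a 1% baseline but under 5% on a 10% baseline, which is why a headline quoting only the relative number tells you so little.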

🚨 The Reality: This study showed a weak association, not causation. But how do you think the media reported it?

📢 “Smoking Weed Causes Heart Attacks, Study Finds!”

📢 “New Research Reveals Major Cardiovascular Risks of Cannabis!”

In reality? The study was observational, didn’t prove causation, and had major confounders. But none of that made the headlines.

…BUT… Perspective Matters!

Don’t Lose Sight: The Science Isn’t Always as Pure as It Seems

Let’s get real: researchers are not neutral robots dedicated to a holy pursuit of absolute truth. They are people—highly trained, highly ambitious, and very aware of the impact of their findings. Many of them want their research to be noticed, cited, and talked about. Most of them also enjoy being continuously employed. So what happens?

🔹 They frame their results in ways that sound as profound as possible.

🔹 They highlight connections that seem significant—even if causation isn’t proven.

🔹 They may overstate implications, knowing that funding, prestige, and career opportunities can hinge on the perception that their research is groundbreaking.

And if scientific researchers—who are supposed to be methodical, critical, and cautious—can get carried away in how they present their data, imagine what happens when that data gets handed off to journalists.

One year eggs are deadly, the next they’re a superfood. This cycle repeats with fat, sugar, salt, and coffee, because most of these claims come from weak correlations, NOT causation.

Sound familiar? The same scientific flip-flopping happens with cannabis, vitamin D, cholesterol, red meat, exercise, fasting, carbs—you name it. If you follow science news, it’s impossible to avoid the whiplash of ever-changing trends crashing into each other.

Science evolves, but headlines often make it seem like research is constantly contradicting itself. In reality, the apparent reversals usually come down to the details: who was studied, how, and how strong the evidence was.

If you want information that will stand the test of time, you have to read with skeptical glasses on. If you don’t, you’re likely to fall prey to the confirmation bias I wrote about last time: lapping up whatever supports your preexisting views and makes you feel like you’re perfect just the way you are.

Or, at the very least, align yourself with someone who has the nerd goggles firmly strapped on—someone who will sift through the noise, read between the lines, and tell you when the hype is real and when it’s just another round of scientific hot potato.

That’s me. And that’s one of the goals of this newsletter: to give at least this small community a place to share skepticism and educated critical reading with others who refuse to be duped.

So the next time you see a “New Study Finds [Insert Scary Thing Here] Tied to [Insert Common Thing Here]” headline, take a deep breath. Ask:

🔹 Did this study actually prove causation, or just an association?

🔹 Who was studied? Were they lifelong heavy users or casual consumers?

🔹 Are there other confounding factors that could explain the findings?

Chances are, the headline isn’t telling the whole story.

And in the world of health, science, and cannabis research, that makes all the difference.

Related Reading | Nature Publication | Catalogue of Bias | Intro to Data Science

Mastering the Science of Causation—How Not to Be Duped

When Correlation Starts to Look Like Causation

The second half of this post is not for the casual reader. This is for those who want to think like a scientist, avoid getting misled by research hype, and truly understand how causation is determined in the real world.

It’s one thing to know that correlation isn’t causation—it’s another to spot the tricks, understand the deeper science, and cut through the noise like a pro.

If you’ve made it this far, congratulations. You’re better armed than most clickbait readers these days.

The goal so far has been to break down how science gets misrepresented. But… how do researchers actually determine when something is truly causal? Now we move beyond the media tricks and dive into the real science of causation. What follows is RED pill territory:

By now, we’ve seen how association can masquerade as causation, and how the media twists that confusion into clickable but misleading headlines. But does that mean we can never identify causation? Not at all.

Some research findings do point to a true cause-effect relationship—so long as we evaluate them with the right criteria. This is where epidemiology, critical thinking, and scientific rigor come in.

The Red Flags: How Researchers Signal (or Fake) Causation

A study isn’t automatically worth believing just because it appears in a peer-reviewed journal. Many studies are designed to look more impressive than they actually are—often in subtle, strategic ways.

Here’s what makes a study’s findings seem more profound than they really are:

1. The Strength of Association is Exaggerated

A legitimate causal relationship usually shows a clear, strong link—like smoking and lung cancer. The risk isn’t just slightly higher; it’s dramatically higher. But a weak correlation (like “Cannabis Linked to Slightly Increased Risk of Heart Disease”) can be framed as alarming, even if the effect is minuscule (remember above, “if the original risk was 1 in 10 million, doubling it still makes it only 2 in 10 million.”)

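🚩 Red Flag: If the relative risk increase is under about 50% (a risk ratio below roughly 1.5), treat it with extra caution. Associations that weak can easily be explained by confounding or bias, or turn out to be statistical noise.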
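To put numbers on "strength of association," here is a minimal Python sketch that computes a risk ratio and its approximate 95% confidence interval from a 2x2 table using the standard log (Katz) method, then applies the 50% rule of thumb above. All counts are hypothetical.

```python
import math


def risk_ratio_ci(a: int, n1: int, c: int, n0: int, z: float = 1.96) -> tuple[float, float, float]:
    """Risk ratio with an approximate 95% confidence interval (log, or Katz, method).

    a: cases among the exposed      n1: number of exposed people
    c: cases among the unexposed    n0: number of unexposed people
    """
    rr = (a / n1) / (c / n0)
    se_log_rr = math.sqrt(1 / a - 1 / n1 + 1 / c - 1 / n0)
    lower = math.exp(math.log(rr) - z * se_log_rr)
    upper = math.exp(math.log(rr) + z * se_log_rr)
    return rr, lower, upper


def classify(rr: float, lower: float, upper: float, weak_cutoff: float = 1.5) -> str:
    """Apply the rule of thumb: treat risk ratios near 1 with caution."""
    if lower <= 1.0 <= upper:
        return "compatible with no effect"
    strength = max(rr, 1 / rr)  # treat protective effects symmetrically
    if strength < weak_cutoff:
        return "weak association: check confounding and bias"
    return "strong association: still check the other criteria"


# Hypothetical studies: (exposed cases, exposed total, unexposed cases, unexposed total)
studies = {
    "small, weak": (120, 10_000, 100, 10_000),
    "large, weak": (1_200, 100_000, 1_000, 100_000),
    "strong": (300, 10_000, 20, 10_000),
}
for name, counts in studies.items():
    rr, lower, upper = risk_ratio_ci(*counts)
    print(f"{name:>12}: RR {rr:.2f} (95% CI {lower:.2f} to {upper:.2f}) "
          f"-> {classify(rr, lower, upper)}")
```

The same risk ratio of 1.2 moves from "compatible with no effect" to statistically significant just by enlarging the sample, without getting any stronger. The 1.5 cutoff is this article's rule of thumb, not a statistical law.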

2. The Study Has Never Been Reproduced

Real science relies on replication. If only one study ever found a link, but no one else has been able to replicate it, it’s probably just noise in the data. Yet, that one study can still become a media sensation.

🚩 Red Flag: If no follow-up studies confirm the findings, be skeptical.

3. The Timing is Backwards (Reverse Causation)

If A causes B, then A must come before B. But many studies fail to prove that order.

Example?

🚩 A study claims that cannabis use causes depression—but if depressed people were more likely to use cannabis in the first place, then the causation might be reversed.

Many cannabis studies suffer from this exact flaw, but it doesn’t stop them from making bold claims.
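Checking "A comes first" can be as simple as comparing dates. This minimal Python sketch sorts a tiny, entirely hypothetical cohort by whether cannabis use or a depression diagnosis came first; the field names and the age-based comparison are illustrative assumptions, not a published method.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Person:
    """Hypothetical cohort record. Ages are in years; None means it never happened."""
    first_use_age: Optional[float]
    diagnosis_age: Optional[float]


def split_by_timing(people: list[Person]) -> dict[str, list[Person]]:
    """Sort people by whether cannabis use or the diagnosis came first."""
    groups: dict[str, list[Person]] = {"use_first": [], "diagnosis_first": [], "not_comparable": []}
    for p in people:
        if p.first_use_age is None or p.diagnosis_age is None:
            groups["not_comparable"].append(p)   # one of the two events never happened
        elif p.first_use_age < p.diagnosis_age:
            groups["use_first"].append(p)        # consistent with use -> depression
        elif p.diagnosis_age < p.first_use_age:
            groups["diagnosis_first"].append(p)  # cannot have been caused by later use
        else:
            groups["not_comparable"].append(p)   # same age: order unknown
    return groups


cohort = [
    Person(16, 22), Person(19, 15), Person(20, 17),
    Person(18, None), Person(None, 25), Person(21, 21),
]
groups = split_by_timing(cohort)
for label, members in groups.items():
    print(f"{label}: {len(members)}")

# One simple guard against reverse causation: set the "diagnosis_first"
# group aside before asking whether use predicts later depression.
```

In this toy cohort, more people were diagnosed before they started using than after, which is exactly the pattern a "cannabis causes depression" headline cannot explain.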

4. No Dose-Response Relationship

A true causal effect usually follows a dose-response curve—the more exposure, the greater the effect.

🚩 If a study finds that both heavy and light cannabis users have the same schizophrenia risk, that contradicts the idea that cannabis itself is causing the issue. Without a dose-response curve, causation is less convincing.

🤔 Current evidence suggests that high and frequent doses of THC may increase the risk of schizophrenia. However, for the vast majority of consumers using low to moderate doses, the risk appears to be negligible.
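A dose-response check can be sketched in a few lines of Python. The exposure levels, their 0 to 3 scoring, and all counts below are hypothetical; a real analysis would use a formal trend test with confidence intervals rather than eyeballing a least-squares slope.

```python
def risks(groups: list[tuple[int, int]]) -> list[float]:
    """Convert (cases, total) pairs into risks."""
    return [cases / total for cases, total in groups]


def strictly_increasing(values: list[float]) -> bool:
    """True if every exposure level has a higher risk than the level below it."""
    return all(later > earlier for earlier, later in zip(values, values[1:]))


def trend_slope(levels: list[int], values: list[float]) -> float:
    """Ordinary least-squares slope of risk against exposure level."""
    mean_x = sum(levels) / len(levels)
    mean_y = sum(values) / len(values)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(levels, values))
    den = sum((x - mean_x) ** 2 for x in levels)
    return num / den


# Exposure scored 0-3: none, monthly, weekly, daily (an assumed ordinal scale).
levels = [0, 1, 2, 3]
# Hypothetical (cases, total) at each level.
gradient = [(100, 10_000), (120, 10_000), (160, 10_000), (240, 10_000)]
flat = [(100, 10_000), (150, 10_000), (150, 10_000), (150, 10_000)]

for name, data in (("gradient", gradient), ("flat", flat)):
    r = risks(data)
    pretty = ", ".join(f"{x:.1%}" for x in r)
    print(f"{name}: risks {pretty}")
    print(f"  rises at every level: {strictly_increasing(r)}")
    print(f"  slope, all levels:  {trend_slope(levels, r):.4f}")
    print(f"  slope, users only:  {trend_slope(levels[1:], r[1:]):.4f}")
```

The flat pattern still shows a positive slope across all levels, because users as a group differ from non-users. It is the users-only slope, zero here, that matches the "heavy and light users have the same risk" scenario.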

5. The Study is Based on Self-Reported Data

Many studies on cannabis rely on surveys—where participants self-report their cannabis use, their mental health, and their habits.

🚩 People lie. Or forget. Or misremember details. Or just want to get through the tedious questionnaire they’re given. Self-reported data is notoriously unreliable, yet many studies treat it as hard science. And most of the cannabis research we have relies on self-report.

Example?

🚩 A study claims that people who smoke cannabis daily have lower IQs. But… are they actually smoking daily, or just guessing when asked? One step further: who smokes the exact same product, in the exact same way, every day?

6. No Mechanism is Explained

Even if a study finds a strong statistical association, causation is far more believable when there’s a known biological mechanism.

🚩 If a study claims eating bacon causes violent behavior, but there’s no biochemical explanation, it’s probably just statistical noise.


Take A Deeper Look At The Game of Scientists At Work.

We expect journalists to sensationalize research, but here’s what most people don’t realize:

🔹 Scientists are also under great pressure to make their studies sound more important than they really are, now more than ever (looking at you, DOGE).

🔹 Getting published in a top journal requires novelty, drama, and big claims.

🔹 Null findings (no significant links) rarely get published—even though they are just as scientifically important as studies that do find a link. This is known as the “file drawer effect”—because studies that find no dramatic results often get tucked away in the research equivalent of a bottom drawer, never seeing the light of day. As a result, the published literature is biased toward studies that report strong correlations, making it seem like links are everywhere, when in reality, many studies that found no connection simply never made it to press.
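The file drawer effect is easy to reproduce in a simulation. This minimal Python sketch assumes the true effect is exactly zero, that every study has the same standard error (0.5 percentage points, an arbitrary choice), and that only results with p < 0.05 get published.

```python
import math
import random


def two_sided_p(z: float) -> float:
    """Two-sided p-value for a z statistic under the standard normal distribution."""
    return math.erfc(abs(z) / math.sqrt(2))


def run_literature(n_studies: int, true_effect: float, se: float,
                   seed: int = 1) -> tuple[list[float], list[float]]:
    """Simulate many studies of the same question and 'publish' only significant ones."""
    rng = random.Random(seed)
    all_estimates: list[float] = []
    published: list[float] = []
    for _ in range(n_studies):
        estimate = rng.gauss(true_effect, se)   # one study's noisy result
        all_estimates.append(estimate)
        if two_sided_p(estimate / se) < 0.05:
            published.append(estimate)          # "significant" result gets printed
        # every other study goes into the file drawer
    return all_estimates, published


def mean_abs(values: list[float]) -> float:
    """Average size of the effects, ignoring direction."""
    return sum(abs(v) for v in values) / len(values)


all_est, published = run_literature(n_studies=1_000, true_effect=0.0, se=0.5)
print(f"Studies run: {len(all_est)}, studies published: {len(published)}")
print(f"Average size of effect, all studies:       {mean_abs(all_est):.2f} points")
print(f"Average size of effect, published studies: {mean_abs(published):.2f} points")
```

Only around one study in twenty makes it to print, and every one of those reports an effect roughly three times larger than the typical study found, even though the true effect is zero.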

So what happens?

Researchers frame their findings to look more significant—usually not by faking data, but by choosing words carefully:

Instead of: “We found no strong evidence linking cannabis to schizophrenia.”

They write: “Our findings suggest a possible connection that warrants further investigation.”

See the difference? One is honest. The other keeps the door open for misinterpretation.

And once that study lands in a journalist’s hands… well, you know what happens next.

How to Read Science Without Getting Scammed

Want to cut through the noise and actually understand if a study is legitimate? Here’s how:

🔹 Ignore the Headlines – Journalists sensationalize everything. Don’t trust them.

🔹 Find the Original Study – Don’t just read an article. Go to the source.

🔹 Look for Confounding Variables – Ask: Did they actually control for other factors that could explain the association?

🔹 Check for a Dose-Response Relationship – If more exposure doesn’t lead to more effect, it’s probably not causal.

🔹 See If the Study Has Been Repeated – If no one else has replicated it, be skeptical.

🔹 Ask If It Even Makes Sense – If a study says, “Drinking green tea prevents heart disease,” but there’s no biological explanation, question it.

🔹 Trust Nerds With Strong BS Detectors – If you don’t have time to dissect research, align yourself with someone who does.

Causation: How Do We Actually Prove It?

Science is obsessed with causation—because it’s the only thing that really matters. Finding an association between two things is easy—proving one actually causes the other? That’s the holy grail.

Here’s how real causation is determined (and how you can tell if a study is actually onto something—or just hyping a correlation).

1. Causal Inference: The Hardest Part of Science

To say A causes B, we need to prove that:

✔ A comes first. (No reverse causation.)

✔ More A leads to more B. (A dose-response relationship.)

✔ Other variables aren’t responsible. (No confounding factors.)

✔ Removing A reduces or eliminates B. (Experimental evidence.)

✔ There’s a logical reason why A would cause B. (Biological plausibility.)

If a study doesn’t meet these standards, it’s not proving causation—it’s just pointing out a pattern.
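The five checks above can be encoded as a simple yes/no record, which makes it obvious which pieces a study is missing. This is a minimal Python sketch; the field names are illustrative, and the example study is scored hypothetically.

```python
from dataclasses import dataclass, fields


@dataclass
class CausalEvidence:
    """Yes/no answers to the five causal checks for one (hypothetical) study."""
    exposure_comes_first: bool      # A comes first: no reverse causation
    dose_response: bool             # more A leads to more B
    confounders_addressed: bool     # other variables aren't responsible
    experimental_evidence: bool     # changing A changes B in an experiment
    plausible_mechanism: bool       # a logical, biological reason A would cause B


def unmet_criteria(evidence: CausalEvidence) -> list[str]:
    """Names of the checks this study does not meet."""
    return [f.name for f in fields(evidence) if not getattr(evidence, f.name)]


def verdict(evidence: CausalEvidence) -> str:
    """Summarize whether the study points at a cause or just a pattern."""
    missing = unmet_criteria(evidence)
    if not missing:
        return "meets all five checks: a causal claim is at least defensible"
    return "pattern only, missing: " + ", ".join(missing)


# A typical observational headline study, scored hypothetically.
observational = CausalEvidence(
    exposure_comes_first=True,
    dose_response=False,
    confounders_addressed=False,
    experimental_evidence=False,
    plausible_mechanism=True,
)
print(verdict(observational))
```

Listing the missing criteria by name is more useful than a single score: it tells you exactly what follow-up research would need to show.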

2. The Causation Pyramid: Ranking Scientific Evidence

Not all studies are created equal. Some barely tell us anything, while others come close to proving causation.

Here’s how to rank research quality from weakest to strongest:

🟥 Case Reports & Case Series (Weakest)

• Example: “We found 100 people who use cannabis and noticed some had heart issues.”

• 🚩 Lots of confounding variables. No proof of causation.

🟧 Cross-Sectional & Case-Control Studies

• Example: “We surveyed 10,000 people at a single point in time and found cannabis users reported slightly higher rates of heart disease.”

• 🚩 Better than case reports, but still just an association.

🟨 Longitudinal (Cohort) Studies

• Example: “We followed 10,000 people for 10 years, tracking cannabis use before any heart issues developed to confirm timing.”

• 🚩 Better evidence on timing, but still observational, so confounding remains possible.

🟩 Randomized Controlled Trials (RCTs) (Strongest Single-Study Design)

• Example: “We randomly assigned 1,000 people to use cannabis and 1,000 people not to, then measured heart disease rates.”

• ✅ Now we’re talking! This is real science, but still not perfect.

🟦 Systematic Reviews & Meta-Analyses (Gold Standard)

• Example: “We analyzed 50 high-quality studies and found consistent results across all of them.”

• ✅ This is the best type of evidence—but only if the included studies were well done.

Most cannabis studies are observational, meaning they cannot prove causation—but that won’t stop the media from pretending they can.

3. The “Cause Mirage”: How Science Can Trick You

Even strong studies can be misleading. Here’s how causation can still be faked—even when a study looks rigorous:

🚩 The Hidden Third Factor (Confounding) – Example: “Coffee drinkers live longer.”

➕ Maybe coffee doesn’t make you live longer—maybe coffee drinkers exercise more, eat better, or have other habits that increase lifespan.

⚠️ Some studies have found that autistic children have higher levels of inflammation in the brain. This has led some people to wrongly assume that inflammation must be the cause of autism (and since vaccines work by triggering an immune response, which involves inflammation, the logic is tempting, but the picture is incomplete). But correlation does not mean causation: autism could lead to immune changes, or both autism and inflammation could stem from a deeper underlying factor like genetics or environmental exposures.

🚩 The Timing Trick (Reverse Causation) – Example: “Cannabis use is linked to schizophrenia.”

➕ What if people predisposed to schizophrenia are more likely to use cannabis? That would mean schizophrenia risk is driving cannabis use, not the other way around. Many people struggling with mental health face a treatment system that fails to meet their needs. Seeking their own solutions, like exercise, diet, and sleep, is not only adaptive but actively encouraged by medical professionals. Yet when individuals turn to cannabis for relief and find it effective, research tends to frame this as cannabis being associated with mental health disorders, rather than recognizing it as a response to inadequate care.

🚩 The “Just a Little Bit” Effect (Small Sample Size) – Example: “We found 15% higher risk of heart disease in cannabis users.”

➕ That sounds significant, but if the risk rises only from 0.10% to 0.115%, the absolute change is tiny, and in a small sample an estimate like that can easily arise by chance. Readers who look beyond the headlines will spot the difference.

🚩 The “Not Really Significant” Trick (P-Hacking) – Example: “Cannabis increases cancer risk (p=0.049).”

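➕ The magic p < 0.05 threshold is the conventional cutoff for “statistical significance,” but many results barely make the cut and may not be repeatable. P-hacking means running many analyses (different outcomes, subgroups, or cutoffs) and reporting the one that happens to cross the line.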

🚩 The Selective Reporting Game – Example: “7 out of 10 studies show cannabis is harmful.”

➕ What if 30 studies found no harm, but those weren’t published? This is called publication bias, and it happens all the time.
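The p=0.049 problem becomes clearer with a little arithmetic. This minimal Python sketch assumes every analysis tests an effect that doesn't exist and that the analyses are independent (a simplifying assumption); it compares the exact probability of getting at least one p < 0.05 with a quick simulation.

```python
import random


def chance_of_false_positive(k: int, alpha: float = 0.05) -> float:
    """P(at least one p < alpha) over k independent tests where nothing is really going on."""
    return 1 - (1 - alpha) ** k


def simulate(k: int, n_studies: int = 10_000, alpha: float = 0.05, seed: int = 7) -> float:
    """Fraction of simulated null 'studies' that find at least one significant result."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_studies):
        # Under a true null hypothesis, a valid p-value is uniform on [0, 1).
        p_values = [rng.random() for _ in range(k)]
        if min(p_values) < alpha:
            hits += 1
    return hits / n_studies


for k in (1, 5, 20):
    print(f"{k:>2} analyses tried: exact {chance_of_false_positive(k):.2f}, "
          f"simulated {simulate(k):.2f}")
```

With 20 independent looks at pure noise, roughly two out of three "studies" find something at p < 0.05. Real analyses overlap, so the true figure is usually lower than this independent-test calculation, but it is never below the single-test 5%. Dividing the threshold by the number of analyses (a Bonferroni correction) is one common, conservative guard.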

4. The Final Test: When Should You Actually Believe a Study?

When you see a new “science says” headline, don’t just believe it—run it through this Causation Checklist:

🔲 Is it an observational study, or an actual experiment?

🔲 Did they control for confounding variables?

🔲 Did they show a dose-response relationship?

🔲 Did they prove that A came before B?

🔲 Was it replicated in multiple studies?

🔲 Does it actually make biological sense?

🔲 Is the effect size large enough to matter?

🔲 Are journalists hyping it up, or is it really solid science?

🔲 Has the study been debunked? Some studies get retracted after new evidence proves them false, but once a claim spreads, it’s hard to erase. The best example? The vaccine-autism myth. The original study was retracted in 2010, yet the misinformation lingers, proving that science’s biggest enemy isn’t just bad research; it’s what people choose to believe long after the science has moved on.

🔲 Does the research match the clinical reality?

If a study fails even one of these tests, be skeptical.

One unique opportunity—often overlooked in research—is the intersection of clinical reality and scientific findings. Healthcare providers witness conditions unfold in real time, offering a raw, unfiltered perspective on how exposures and outcomes play out in daily life. While this view has its limitations—since only certain individuals seek medical care, creating a built-in selection bias—high patient volume can reveal patterns that research alone might miss.

This is one of the key advantages of CED Clinic’s approach to cannabis care. With thousands of patients under ongoing care and longitudinal tracking, we have a unique opportunity to observe how cannabis impacts health in real-world settings, complementing the insights gained from lab studies and clinical trials.

Summing it Up: The Relentless Pursuit of Truth

The world is drowning in bad science, misleading media, and half-baked conclusions. Sensationalized headlines push overhyped findings, and even peer-reviewed research isn’t immune from bias, spin, and selective reporting.

If you want real, future-proof information, you have to look beyond the headlines, beyond single studies, and deep into the logic, methodology, and motivations behind the research.

That takes time, patience, and a relentless commitment to the truth.

Most people won’t do that work—they’ll take the easy answers, the bold claims, the viral soundbites.

But you will.

Because you’re still reading.

And if you stick with people who actually care about truth—not just clicks, ideology, or attention—you’ll always be ahead of the game.

🔹 Truth isn’t a quick fix.

🔹 It’s not a single study.

🔹 It’s the patient, skeptical search for patterns that hold up over time.

Want truth that stands the test of time? You won’t find it in single studies, media hype, or the latest health scare du jour.

It’s in:

✔ Recognizing patterns across years of research—not cherry-picking single studies.

✔ Skeptical analysis of funding, biases, and methodology.

✔ Looking beyond what’s being said, to why it’s being said.

So next time you see “New Study Finds…”, don’t just take the bait.

Ask the harder questions.


🚨 Stop. Ask yourself:

🤔 Does the study prove causation or just an association?

🤑 Who funded it? Who benefits from the conclusion?

🫣 What’s the absolute risk vs. relative risk?

😶🌫️ Has the study been debunked? Or is it a lucky result while the null findings sit in the ‘file drawer’?

Most people won’t bother to ask these questions. They’ll just share the clickbait headline and move on.

But if you’ve made it this far, you’re different. You’re someone who reads to the end and thinks before believing. That’s what separates hype chasers from truth seekers.


For a deeper dive, The Doctor-Approved Cannabis Handbook explores bias in cannabis research in greater detail. The book encourages readers to critically evaluate claims and look for well-designed clinical trials rather than relying solely on personal experiences or observational studies.

Causation vs. Correlation

Page 102: This is in the section where I break down how to interpret cannabis research responsibly.

“Just because two things happen together—such as cannabis use and symptom improvement—does not mean one caused the other. This is a common mistake in both media reports and personal testimonials.”

Page 104: I address how media headlines can misrepresent cannabis studies, leading people to assume causal relationships where none exist.

“A study might find that people who use cannabis report lower levels of anxiety, but that doesn’t necessarily mean cannabis caused the reduction—it could be that people who are naturally less anxious are more likely to use cannabis.”

Confirmation Bias

Page 55: I discuss how patients and even some healthcare providers may unconsciously focus on positive experiences with cannabis while overlooking studies that show mixed or negative results.

“Many people approach cannabis with strong beliefs—either positive or negative. This can lead to confirmation bias, where we selectively notice and remember information that supports what we already think.”

Page 57: I discuss how people who believe cannabis is a “miracle cure” may only seek out success stories while ignoring studies that show mixed results or potential side effects.

“If someone is convinced that cannabis cures insomnia, they might only remember the nights when it helped them sleep while forgetting the times it didn’t.”